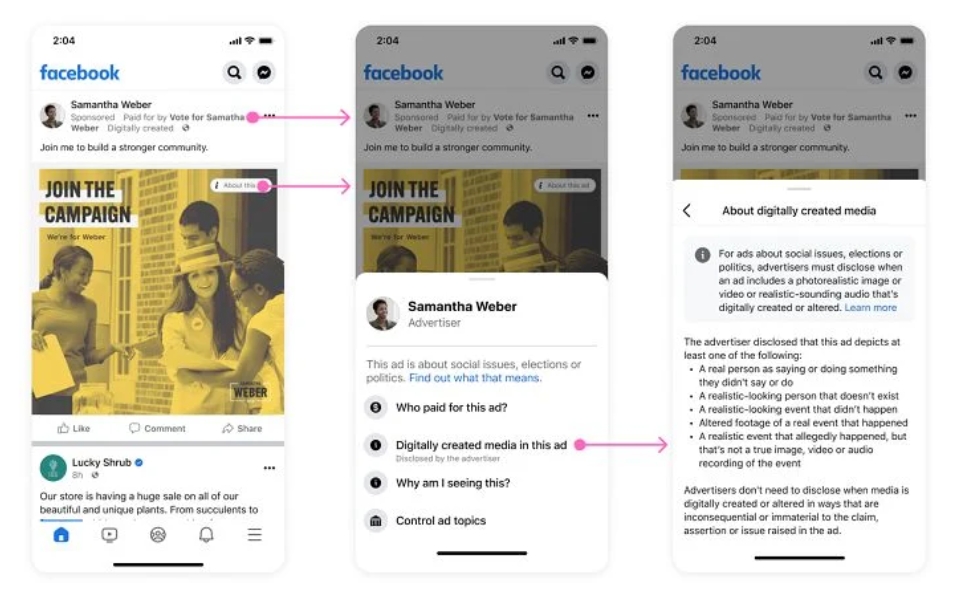

Meta has begun enforcing its disclosure requirements for digitally altered political ads, a response to the growing use of generative AI in political campaigns. The rules are meant to make online content more transparent, particularly around elections and political discourse.

Key Points:

1. Meta’s disclosure rules, announced in November, now apply to all advertisers running ads about social issues, elections, or politics.

2. Advertisers must explicitly state if their content contains AI-generated or digitally altered elements, such as images, videos, or realistic-sounding audio.

3. Advertisers make the disclosure through a checkbox in the ad setup flow for these categories, which places responsibility for the declaration on the advertiser.

4. The step aligns with similar efforts by YouTube and TikTok, which have introduced tags for AI-generated content so that viewers can identify it.

Addressing Risks:

1. Meta acknowledges the potential for misleading promotions by political advertisers, especially in the lead-up to elections.

2. To mitigate this, Meta enforces a blackout period on political ads in the week before an election, leaving time to verify and debunk false claims before voting begins.

Ongoing Monitoring and Updates:

1. Advertisers who fail to comply with the new disclosure requirements risk having their ads removed and may be banned.

2. Advertisers can update previously launched campaigns to add the new AI disclosure tag.

Meta’s enforcement of transparency rules for AI-generated political ads may set a precedent for the industry. As AI tools advance, continued vigilance will be needed to preserve the integrity of political discourse and elections, and how well these rules work in practice remains to be seen.