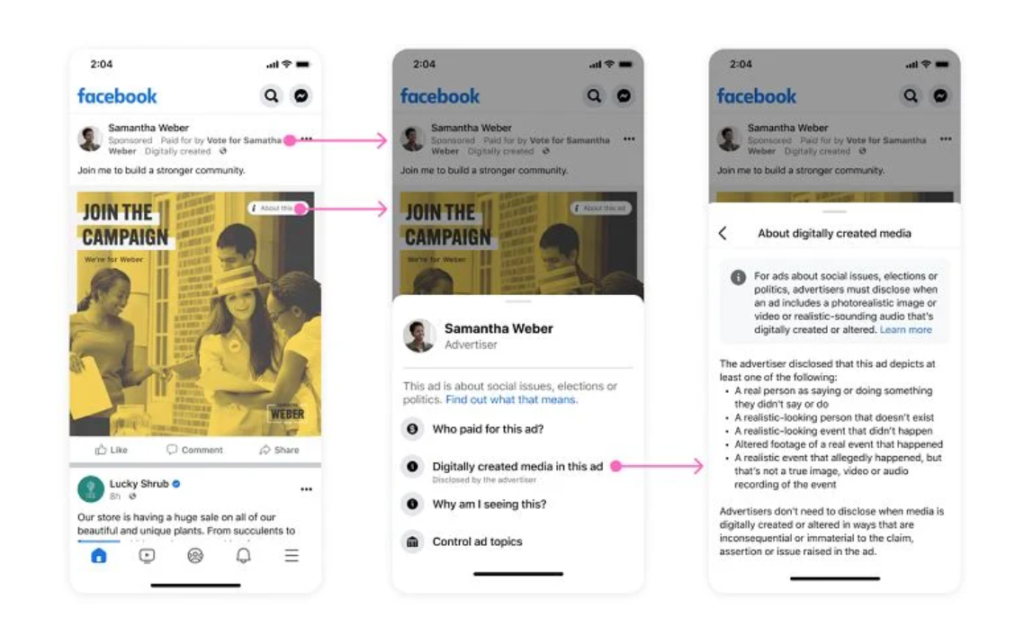

Meta, the parent company of Facebook, has rolled out new disclosure requirements for digitally altered political ads to address the rise of AI-generated content. The measures, effective immediately, aim to make political advertising more transparent, especially as the U.S. election campaign approaches.

Policy Overview:

Announced last November, Meta’s policy requires advertisers running ads about social issues, elections, or politics to state explicitly when an ad contains digitally altered content, whether image, video, or audio, and whether it was generated by AI or by other methods.

Preventing Deception:

In response to the advancing capabilities of AI tools, Meta joins platforms such as YouTube and TikTok in discouraging deceptive practices by tagging AI-generated content. Advertisers now see a checkbox during ad setup for declaring digitally altered content, which is how they comply with the new disclosure requirements.

Implications for Politics:

To reduce the risk of misleading promotions in the run-up to a vote, Meta enforces a blackout period in the week before elections. This period allows time for fact-checking and for debunking false claims or manipulated content before voters cast their ballots.

Ongoing Monitoring:

Meta emphasizes continuous monitoring and enforcement: advertisers who fail to indicate digital alterations face ad removal and potential bans. Advertisers are also encouraged to update previous campaigns with the new AI disclosure tag.

Meta’s proactive approach to AI-generated content in political ads sets a precedent for how platforms handle such material. By prioritizing transparency and accountability, Meta aims to ensure an authentic online experience, especially in critical areas like political discourse. As AI’s influence continues to grow, these measures represent a significant step toward keeping content on digital platforms trustworthy.